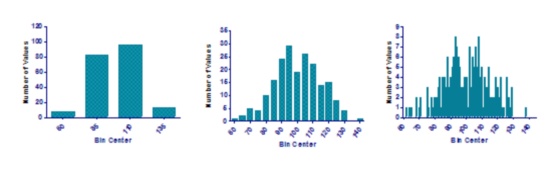

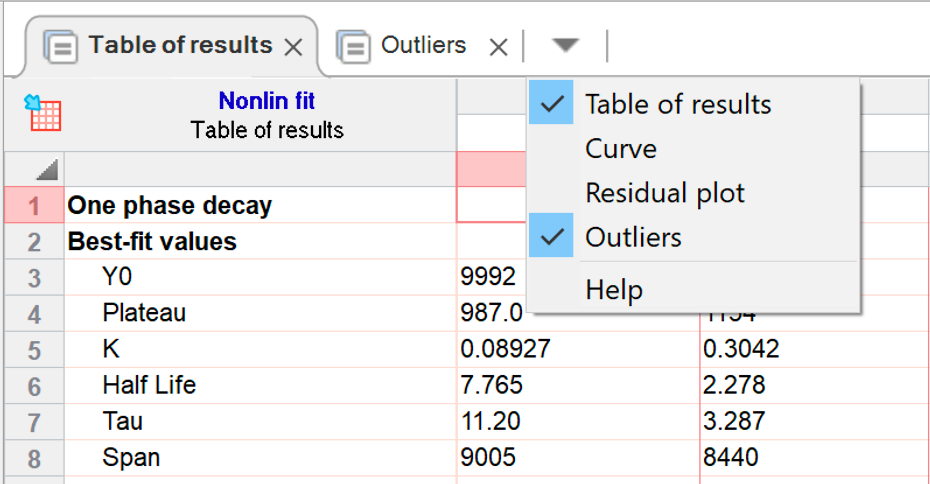

Nonlinear regression, like linear regression, assumes that the scatter of data around the ideal curve follows a Gaussian or normal distribution. This assumption leads to the familiar goal of regression: to minimize the sum of the squares of the vertical or Y-value distances between the points and the curve.

However, experimental mistakes can lead to erroneous values: outliers. Even a single outlier can dominate the sum-of-the-squares calculation and lead to misleading results.

Even when all scatter comes from a Gaussian distribution, sometimes a point will be far from the rest. In this case, removing that point will reduce the accuracy of the results. But some outliers are the result of an experimental mistake, and so do not come from the same distribution as the other points. These points will dominate the calculations and can lead to inaccurate results. Removing such outliers will improve the accuracy of the analyses.

Outlier elimination is often done in an ad hoc manner. With such an informal approach, it is impossible to be objective or consistent, or to document the process. Several formal statistical tests have been devised to determine whether a value is an outlier, reviewed in. If you have plenty of replicate points at each value of X, you could use such a test on each set of replicates to determine whether a value is a significant outlier from the rest. Unfortunately, no outlier test based on replicates will be useful in the typical situation where each point is measured only once or a few times.

One option is to perform an outlier test on the entire set of residuals (distances of each point from the curve) of least-squares regression. The problem with this approach is that the outlier can pull the curve so strongly toward itself that it ends up not much further from the fitted curve than the other points, so its residual will not be flagged as an outlier.

Rather than remove outliers, an alternative approach is to fit all the data (including any outliers) using a robust method that accommodates outliers so they have minimal impact. Robust fitting can find reasonable best-fit values of the model's parameters but cannot be used to compare the fits of alternative models. Moreover, as far as we know, no robust nonlinear regression method provides reliable confidence intervals for the parameters or confidence bands for the curve. So robust fitting alone is not yet an approach that can be recommended for routine use.

As suggested by Hampel, we combined robust regression with outlier detection:

1. Fit a curve using a new robust nonlinear regression method.
2. Analyze the residuals of the robust fit, and determine whether one or more values are outliers. To do this, we developed a new outlier test adapted from the False Discovery Rate approach of testing for multiple comparisons.
3. Remove the outliers, and perform ordinary least-squares regression on the remaining data.

We describe the method in detail in this paper and demonstrate its properties by analyzing simulated data sets. Because the method combines Robust regression and OUTlier removal, we call it the ROUT method.

The robust fit will be used as a "baseline" from which to detect outliers. It is important, therefore, that the robust method give very little weight to extremely wild outliers. Since we anticipate that this method will often be used in an automated way, it is also essential that the method not be easily trapped by a false minimum and not be overly sensitive to the choice of initial parameter values. Surprisingly, we were not able to find an existing method of robust regression that satisfied all these criteria.

Following a suggestion in Numerical Recipes, we based our robust fitting method on the assumption that variation around the curve follows a Lorentzian distribution rather than a Gaussian distribution. Both distributions belong to the family of t distributions shown in Figure 1 (the Gaussian is the limiting case as the degrees of freedom grow). The widest distribution in that figure, the t distribution for df = 1, is also known as the Lorentzian or Cauchy distribution. The Lorentzian distribution has wide tails, so outliers are fairly common under it and therefore have little impact on the fit.
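The difference between the Gaussian and Lorentzian assumptions is easiest to see in the quantity each one asks the fit to minimize. In the sketch below, the notation is introduced here for illustration and is not taken from the paper: f is the model with parameters θ, r_i is the residual of point i, and s is a scale parameter of the Lorentzian that is treated as fixed.

```latex
\begin{aligned}
\text{Gaussian:}   &\quad \hat{\theta} = \arg\min_{\theta} \sum_{i=1}^{n} r_i^{2},
  \qquad r_i = y_i - f(x_i;\theta) \\
\text{Lorentzian:} &\quad \hat{\theta} = \arg\min_{\theta} \sum_{i=1}^{n} \ln\!\left(1 + \frac{r_i^{2}}{s^{2}}\right)
\end{aligned}
```

Both lines are negative log-likelihoods with constants dropped. The Lorentzian density is 1/{πs[1 + (r/s)²]}, so the negative log of each point's density is ln(πs) + ln(1 + r²/s²). For fixed s, the first term does not depend on θ. A squared residual grows without bound, but the logarithm grows only slowly. That slow growth is why a single wild point cannot dominate the Lorentzian sum the way it dominates the sum of squares.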
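A second way to see why the wide tails help is to look at each residual's "pull" on the fit, meaning the derivative of its term in the quantity being minimized. For least squares the pull is 2r. For the Lorentzian term ln(1 + r²/s²) it is 2r/(s² + r²). The short Python sketch below tabulates both. The function names are hypothetical, and s = 1 is an arbitrary choice for illustration.

```python
def ls_influence(r):
    """Derivative of r**2: a point's pull on the fit grows without bound."""
    return 2.0 * r


def lorentzian_influence(r, s=1.0):
    """Derivative of ln(1 + (r/s)**2): largest at |r| = s, then decays toward 0."""
    return 2.0 * r / (s * s + r * r)


for r in (0.5, 1.0, 3.0, 10.0, 100.0):
    print(f"r = {r:6.1f}   least squares: {ls_influence(r):7.2f}   "
          f"Lorentzian: {lorentzian_influence(r):.4f}")
```

Under least squares, a residual of 10 pulls ten times harder than a residual of 1. Under the Lorentzian it pulls about five times less (20/101 ≈ 0.198 versus 1.0). This is the sense in which the robust fit gives "very little weight to extremely wild outliers."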
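The three-step strategy (robust fit, outlier test on its residuals, ordinary least-squares refit) can be sketched end to end. This is a minimal illustration under stated assumptions, not the ROUT implementation:

- The model is a straight line y = a + b·x, so each weighted fit has a closed form. A nonlinear model would need an iterative weighted fitter.
- The robust fit uses iteratively reweighted least squares with Lorentzian weights 1/(1 + (r/s)²).
- The scale s is taken as 1.4826 × the median absolute residual.
- Step 2 substitutes the standard Benjamini-Hochberg FDR procedure, applied to normal-approximation p-values, for the paper's own outlier test.

All function names are hypothetical, and q = 0.01 is an illustrative choice.

```python
import math
import statistics


def weighted_line_fit(xs, ys, ws):
    """Weighted least-squares fit of y = a + b*x; returns (a, b)."""
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def residuals(xs, ys, a, b):
    return [y - (a + b * x) for x, y in zip(xs, ys)]


def robust_scale(res):
    # Assumption: 1.4826 * median |residual| as a robust SD estimate.
    # ROUT defines its own robust SD; this is a stand-in.
    return max(1.4826 * statistics.median(abs(r) for r in res), 1e-12)


def lorentzian_fit(xs, ys, iterations=50):
    """Step 1 (sketch): IRLS for sum(ln(1 + (r/s)**2)) at the current scale s."""
    a, b = weighted_line_fit(xs, ys, [1.0] * len(xs))  # start from the OLS line
    for _ in range(iterations):
        res = residuals(xs, ys, a, b)
        s = robust_scale(res)
        # IRLS weight for the Lorentzian merit function: psi(r)/r is proportional to this.
        ws = [1.0 / (1.0 + (r / s) ** 2) for r in res]
        a, b = weighted_line_fit(xs, ys, ws)
    return a, b


def fdr_outliers(res, q=0.01):
    """Step 2 (stand-in): Benjamini-Hochberg at FDR q on two-sided normal p-values."""
    s = robust_scale(res)
    pvals = [math.erfc(abs(r / s) / math.sqrt(2.0)) for r in res]
    order = sorted(range(len(res)), key=lambda i: pvals[i])
    m = len(res)
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= q * rank / m:  # keep the largest rank that passes
            cutoff = rank
    return set(order[:cutoff])


def rout_like_fit(xs, ys, q=0.01):
    """Steps 1-3: robust fit, FDR outlier test, then OLS on the remaining points."""
    a, b = lorentzian_fit(xs, ys)
    outliers = fdr_outliers(residuals(xs, ys, a, b), q)
    keep = [i for i in range(len(xs)) if i not in outliers]
    kx = [xs[i] for i in keep]
    ky = [ys[i] for i in keep]
    return weighted_line_fit(kx, ky, [1.0] * len(kx)), outliers


# Usage: a clean line y = 2 + 0.5x with small made-up scatter and one "mistake".
xs = list(range(10))
noise = [0.1, -0.1, 0.05, -0.05, 0.08, -0.08, 0.02, -0.02, 0.06, -0.06]
ys = [2.0 + 0.5 * x + e for x, e in zip(xs, noise)]
ys[6] += 8.0
(a, b), outliers = rout_like_fit(xs, ys)
print(outliers, round(a, 3), round(b, 3))
```

Starting the robust fit from the ordinary least-squares line keeps the sketch short. However, that choice is exactly the kind of dependence on initial values that the text warns about, so a production method would need to guard against false minima.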
